Google’s JAX: Flexible, High-Performance Machine Learning

Server-side batching: Scaling inference throughput in machine learning

Caleb Kaiser

December 2020

How we served 1,000 models on GPUs for $0.47

Caleb Kaiser

December 2020

Designing a machine learning platform for both data scientists and engineers

Caleb Kaiser

December 2020

Netflix's Metaflow: Reproducible machine learning pipelines

Caleb Kaiser

December 2020

How to serve batch predictions with TensorFlow Serving

Caleb Kaiser

December 2020

How to build a pipeline to retrain and deploy models

Caleb Kaiser

December 2020

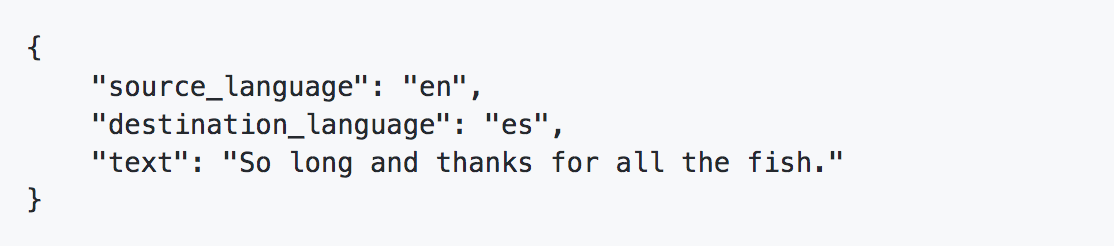

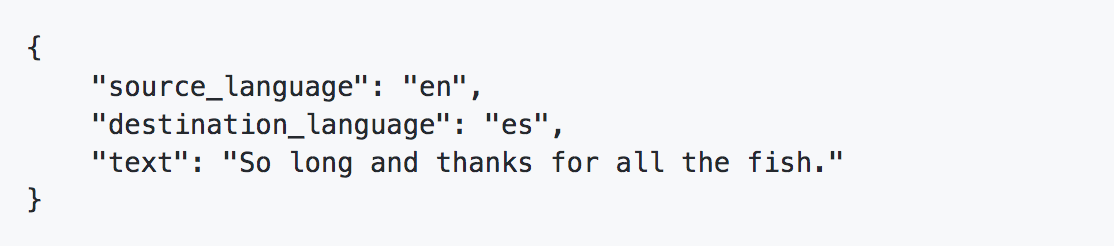

How to deploy Transformer models for language tasks

Caleb Kaiser

December 2020

Cortex 0.24: Announcing multi-cloud support

Caleb Kaiser

December 2020

How we scale machine learning model deployment on Kubernetes

Caleb Kaiser

December 2020

Why we built a serverless machine learning platform—instead of using AWS Lambda

Caleb Kaiser

November 2020

Why we don’t deploy machine learning models with Flask

Caleb Kaiser

November 2020

How to deploy machine learning models from a notebook to production

Caleb Kaiser

November 2020

Why we use YAML—not notebooks—for machine learning

Caleb Kaiser

November 2020

5 Lessons Learned Building an Open Source MLOps Platform

Caleb Kaiser

November 2020

Machine learning doesn't have to be expensive

Caleb Kaiser

November 2020

A/B testing machine learning models in production

How to deploy 1,000 models on one CPU with TensorFlow Serving

How to reduce the cost of machine learning inference

How to deploy PyTorch Lightning models to production

Improve NLP inference throughput 40x with ONNX and Hugging Face

Catching poachers with machine learning
