For the last two years, we’ve been working on Cortex, our open source machine learning deployment platform. Over that time, we’ve been really fortunate to see it grow into what it is today, used in production by teams around the world, and supported by a fantastic community of contributors.

We’ve also had to change our thinking several times along the way. The understanding of the ML ecosystem we had at the beginning has not always turned out to be accurate, and this is reflected in various changes we’ve made to Cortex.

As interest in MLOps continues to increase, I thought it would be useful (for our sakes as much as anyone else’s) to document a few of the key lessons we’ve learned that’ve come to shape Cortex.

If you’re working on a production machine learning system, building machine learning infrastructure, or designing your own MLOps tool, hopefully the following lessons (listed in no particular order) are useful for you.

1. Production machine learning runs in the cloud

When Cortex was still in its idea stage, one of our most frequent discussions was whether or not it should support on-premise deployments. At the time, the worry was that a large portion of the machine learning ecosystem was going to remain on-premise indefinitely due to privacy and cost.

These worries were enflamed when we initially released Cortex. While we had some excited users, we also had plenty of people writing in requesting on-prem support. We worried that by going all-in on the public clouds, we’d cut off most of the machine learning ecosystem.

Over the last two years, things have changed. Production machine learning is almost entirely moving to the cloud, and there are a couple reasons why.

The first is the standard reason for moving to the cloud: scalability. As production machine learning systems become more powerful and responsible for more features, their workloads increase. If you need to autoscale to dozens of GPUs during peak hours, the cloud has obvious advantages.

The second is the investment by the major clouds into ML-specific offerings. Major clouds now offer both dedicated software and hardware for machine learning. For example, Google and AWS both offer ASICs (TPUs and Inferentia, respectively) that substantially improve machine learning performance, and both are only available on their respective clouds.

More and more, the cloud is becoming the only realistic way to deploy production machine learning systems.

2. It’s too early for end-to-end MLOps tools

Another misguided belief we held in Cortex’s early days was that Cortex needed to be an all-inclusive, end-to-end MLOps platform that automated your pipeline from raw data to deployed model.

We’ve written a full breakdown of why that was the wrong decision, but the short version is that it’s still way too early in the lifespan of MLOps to build that sort of platform.

Every page of the production machine learning playbook is constantly being rewritten. For example, in the last several years:

- Our notion of “big” models has exploded. We thought models with hundreds of millions of parameters were flirting with boundaries of being “too large” to deploy. Then Transformer models like GPT-2 started weighing in the billions—and people still built applications out of them.

- The ways we train models have changed. Transfer learning, neural architecture search, knowledge distillation—we have more techniques and tools than ever to design, train, and optimize models efficiently.

- The machine learning toolbox has grown rapidly. PyTorch was only released in 2016, shortly after TF Serving’s initial public release. ONNX came out in 2017. The frameworks, languages, and features that an end-to-end MLOps platform would need to support changes endlessly.

We ran into all of these problems with our first release of Cortex. We provided a seamless experience—if you used the narrow stack we supported. Because everything (including language, pipeline, frameworks, and even team structure) can vary so wildly across ML orgs, we were almost always “one feature away” from fitting any given team’s stack.

As a modular platform, focused on one discrete part of the machine learning lifecycle—deployment—without opinions about the rest of the stack, Cortex has been adopted by many more teams at a much faster pace. We’ve seen rapid growth in other MLOps tools with similar “best of breed” approaches at different parts of the stack, including DVC (Data Version Control) and Comet.

3. Data science, ML engineering, and ML infrastructure are all different — in theory

With Cortex, we use the following high-level model of an ML function and its constituent parts:

- Data science. Concerned with the development of models, from exploring the data to conducting experiments to training and optimizing models.

- Machine learning engineering. Concerned with the deployment of models, from productionizing models to writing inference services to designing inference pipelines.

- Machine learning infrastructure. Concerned with the design and management of the ML platform, from resource allocation to cluster management to performance monitoring.

And in theory, these are nicely delineated functions with clear handoff points. Data science creates models which are turned into inference pipelines by ML engineering and deployed to a platform maintained by ML infrastructure.

But, this is an overview of the theoretical functions in an ML org, not the actual roles people hold. Oftentimes, a data scientist will also do ML engineering work, or an ML engineer will be tasked with managing an inference cluster.

Building a tool for these different use-cases gets complex, as the optimal ergonomics of an interface for one role can vary drastically from another.

For example, for reasons we’ve explained before, Cortex APIs are written as Python scripts with YAML manifests, not notebooks, and are deployed via a CLI.

For MLEs, this is comfortable. For data scientists, however, it is often uncomfortable, as YAML and CLIs aren’t common tools in their ecosystem. Because of this, we needed to build a Python client for defining deployments in pure Python in order for some teams to use Cortex successfully.

Now, people who are more comfortable with CLIs can deploy like this:

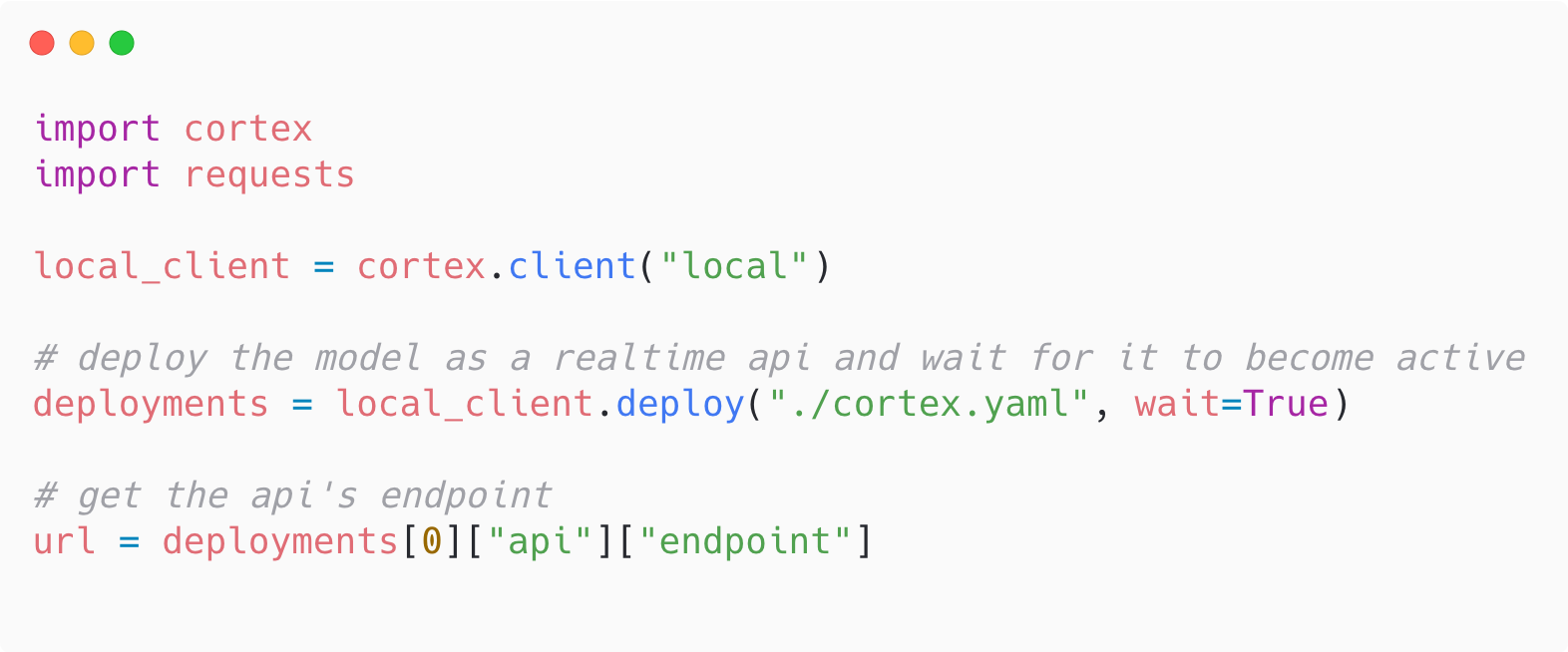

And people more comfortable with pure Python can do this:

The takeaway here is that if you’re building MLOps tooling, remember everyone who will be using it in practice, not just in theory.

4. ML native companies have different needs

Several years ago, the most common examples of production machine learning were popular products optimized by trained models. Payment processors would sprinkle in fraud detection models, streaming platforms would boost their engagement with recommendation engines, etc.

Now, however, there is a new wave of companies whose products aren’t enhanced by models—they are models.

These companies, which we refer to as ML native, operate in different ways. Some sell access to an inference pipeline as an API, as in the case of Glisten, whose API allows retailers to tag and categorize products instantly:

Others build applications whose core functionality is provided by a trained model. For example, PostEra’s medicinal chemistry platform uses models to predict the most likely chemical reactions for creating a specific drug, and AI Dungeon uses a trained language model to create an endless choose-your-own-adventure:

These ML native applications have different infrastructure needs. For one, they typically rely on realtime inference, meaning their models need to be deployed and available at all times.

Ensuring this availability can get very expensive. AI Dungeon uses a 6 GB model that can only handle a few concurrent requests and requires GPUs for inference. To scale to even a few thousand concurrent users, they need many large GPU instances running at once—something that is costly to sustain for long periods.

When we first built Cortex, we hadn’t worked with many ML native teams. After working with them, we wound up prioritizing a new set of features, many of which were at least in part aimed at helping control inference costs:

- Request-based autoscaling to optimally scale each model for spend

- Spot instance support to allow for cheaper base instance prices

- Multi-model caching, live reloading, and multi-model endpoints to increase efficiency

- Inferentia support for more cost-effective and performant instance types

As the number of ML native companies continues to rise quickly, MLOps tools and platforms are going to have to build for their needs.

5. MLOps is production machine learning’s biggest bottleneck

This is one of the few things we believed before building Cortex that we still find to be true today. It is the feasibility of building and deploying a production machine learning system prevents teams from using ML.

Training and retraining models is not cheap. Deploying models to production isn’t cheap either. Building a platform to support those deployments is a full-scale infrastructure project, one that has to be maintained moving forward.

These costs make machine learning unapproachable for most companies. and the frustrating part is that they aren’t intrinsic qualities of machine learning. We can solve them with better infrastructure—no ML research breakthroughs needed.

As the MLOps ecosystem matures, new tools will continue to abstract away these parts of infrastructure and nullify the costs that prohibit teams from using ML in production. If you want to accelerate the proliferation of machine learning, consider contributing to any of the many open source MLOps projects—like this one.

.jpg)