Resource efficiency is a primary concern in production machine learning systems. This is particularly true in the case of realtime inference, where deployed models need to perform inference on-demand with low latency.

The challenge is in balancing availability with minimalism. To maximize availability, you need enough deployed instances to perform inference on all of your models. To minimize costs, you want to deploy as few instances as possible. The tension between these two forces can be frustrating.

We’ve worked on many features to make this challenge easier in Cortex, including request-based autoscaling, multi-model APIs, and Spot instance support, and we’ve recently developed something new that we believe will make the balance even easier to strike.

Cortex’s upcoming release will officially support multi-model caching, which allows you to run inference on thousands of models from a single deployed instance.

Multi-model caching: a brief introduction

The standard approach to realtime inference, which Cortex uses, is what we call the model-as-microservice paradigm. Essentially, it involves writing an API that runs inference on a model (a predictor), and deploying it as a web service.

For example, this is a TensorFlow predictor in Cortex for a simple iris classifier:
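As a rough illustration, a predictor of this kind can look like the minimal sketch below. It assumes Cortex’s TensorFlow predictor interface (a class whose constructor receives a `tensorflow_client` and which exposes a `predict()` method) and assumes the model returns its result under a `class_ids` key. The `StubClient` and the sample payload are hypothetical stand-ins so the snippet runs without a cluster.

```python
# Iris class labels, in the order the model is assumed to emit class ids.
LABELS = ["setosa", "versicolor", "virginica"]


class TensorFlowPredictor:
    def __init__(self, tensorflow_client, config):
        # Cortex passes in a client that talks to the TensorFlow Serving container.
        self.client = tensorflow_client

    def predict(self, payload):
        # Forward the request body to the model, then map the class id to a label.
        prediction = self.client.predict(payload)
        class_id = int(prediction["class_ids"][0])
        return LABELS[class_id]


class StubClient:
    """Hypothetical stand-in for the Cortex client, used only to run this sketch."""

    def predict(self, payload):
        # Pretend the model always predicts class 1.
        return {"class_ids": [1]}


predictor = TensorFlowPredictor(StubClient(), config={})
result = predictor.predict(
    {"sepal_length": 5.2, "sepal_width": 3.6, "petal_length": 1.4, "petal_width": 0.3}
)
print(result)
```

The point to notice is that the client is bound to whatever model the API was initialized with; the request itself has no way to ask for a different one.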

And while you can load multiple models into an API, the API is still limited to the models that it was initialized with.

In many cases, this isn’t an issue. If you have two models in your pipeline, both of which are queried frequently, then there is no problem with initializing them in their own APIs and keeping them deployed.

It does become an issue, however, when you have many models that are queried infrequently.

For example, say you have a translation app that can translate text between 200 different languages. If you have a model trained for each language-to-language translation, that’s a lot of models: 200 × 199 = 39,800 directed language pairs.

Initializing all of them within APIs and deploying each API to production would be wastefully expensive, especially when you consider that many of them are going to be rarely queried at all.

A better solution would be to deploy your most popular models within their own APIs, so that they are always available, and to define another API that is capable of downloading and caching your less popular models as needed. It might add seconds to your Esperanto-to-Archi translations, but it will allow you to serve predictions from hundreds of obscure models without paying for hundreds of instances.

This, in a nutshell, is what multi-model caching does. It allows an API to access models remotely, to store a certain number of them in a local cache, and to update that cache as needed. With multi-model caching, the number of models we can serve from a single instance is no longer bounded by what fits on that instance at once; in theory, it can be arbitrarily large.
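To make the idea concrete, here is a toy sketch of a fixed-size model cache. It is not Cortex’s implementation: the `ModelCache` class, the `fake_loader` function, and the model names are hypothetical, and least-recently-used eviction is assumed here simply because it is a common policy for this kind of cache.

```python
from collections import OrderedDict


class ModelCache:
    """Toy cache that keeps at most `capacity` models, evicting the least recently used."""

    def __init__(self, capacity, loader):
        self.capacity = capacity
        self.loader = loader         # hypothetical: fetches a model from remote storage
        self.models = OrderedDict()  # model name -> loaded model, least recent first
        self.downloads = 0

    def get(self, name):
        if name in self.models:
            # Cache hit: mark the model as most recently used.
            self.models.move_to_end(name)
            return self.models[name]
        if len(self.models) >= self.capacity:
            # Cache full: drop the least recently used model to make room.
            self.models.popitem(last=False)
        # Cache miss: download the model and keep it.
        self.models[name] = self.loader(name)
        self.downloads += 1
        return self.models[name]


def fake_loader(name):
    # Stand-in for downloading a model from a bucket.
    return f"<model {name}>"


cache = ModelCache(capacity=3, loader=fake_loader)
for name in ["en-fr", "en-de", "en-fr", "eo-archi", "fr-ja", "en-fr"]:
    cache.get(name)

print(list(cache.models))  # models still cached, least recently used first
print(cache.downloads)     # number of requests that had to download a model
```

In this run, six requests trigger four downloads, and the frequently requested `en-fr` model stays cached while the least recently used one is dropped when a fourth model arrives.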

Deploying TensorFlow Serving with multi-model caching

To demonstrate multi-model caching in Cortex, I’ve put together this simple example with TensorFlow. You can use any framework; I’ve used TensorFlow only because I wanted to use Cortex’s TensorFlow Serving client.

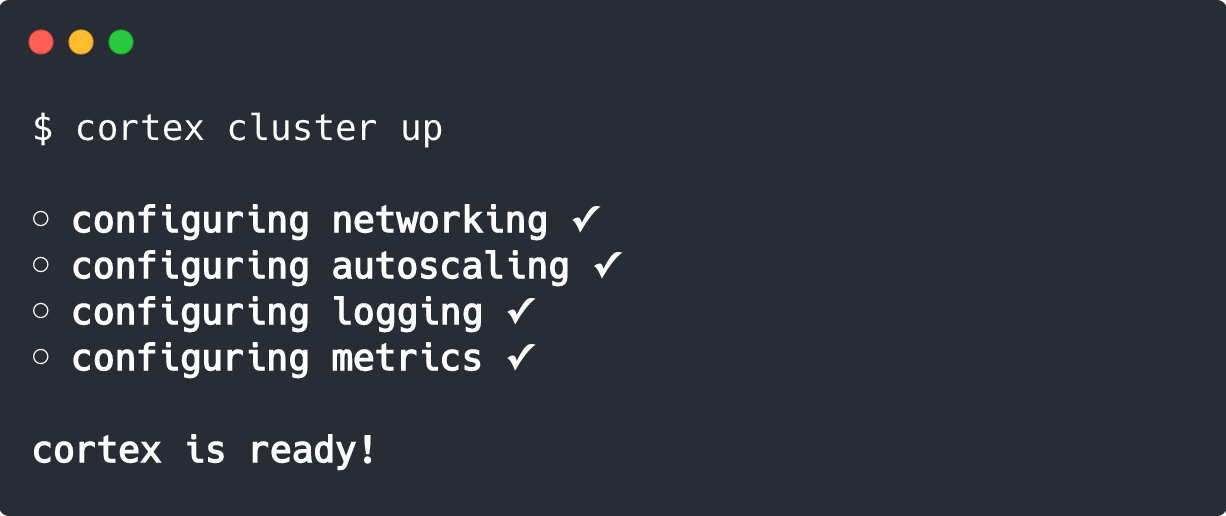

The process is almost identical to any other Cortex deployment. If you don’t already have a cluster running, initialize one:

Once your cluster is available, define your API’s configuration (I’m using the language translation example from the previous section):

As you can see, this YAML manifest is the same as any other Cortex API, with the addition of the models field. This field tells the predictor where our S3 bucket with the models is, how many models to keep in the cache, and how many models can fit on the disk. For this example, we’re assuming our instance can only fit three models.

Next, we need to write our API’s inference-generating code:

Again, more or less the same as a normal Cortex API. We initialize a TensorFlow predictor, which gives us a TensorFlow client (a wrapper around a TensorFlow Serving container), and we initialize our encoder. In our predict() method, we pass our inputs into our TensorFlow Serving container via the TensorFlow client, and then we return the predictions.

The only difference here is that we now include the model name and version in our request to the TensorFlow client. The model name, which we generate on the application’s frontend and pass in through the initial query, tells our TensorFlow Serving container which model to access in S3, and the model version specifies which version.
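A minimal sketch of that request flow might look like the following. It assumes the TensorFlow client’s `predict()` accepts the model name and version as additional arguments and that query parameters are passed to `predict()` as a dictionary; the parameter names `model` and `version`, the `StubClient`, and the response shape are hypothetical, and the encoder step is omitted for brevity.

```python
class TensorFlowPredictor:
    def __init__(self, tensorflow_client, config):
        self.client = tensorflow_client

    def predict(self, payload, query_params):
        # Hypothetical query string: ?model=en-fr&version=2
        model_name = query_params["model"]
        model_version = query_params.get("version", "latest")
        # Assumed client signature: predict(model_input, model_name, model_version).
        return self.client.predict(payload, model_name, model_version)


class StubClient:
    """Hypothetical stand-in that records which model each request asked for."""

    def __init__(self):
        self.calls = []

    def predict(self, model_input, model_name=None, model_version="latest"):
        self.calls.append((model_name, model_version))
        text = model_input["text"]
        return {"translation": f"[{model_name}@{model_version}] {text}"}


client = StubClient()
predictor = TensorFlowPredictor(client, config={})
response = predictor.predict({"text": "hello"}, {"model": "en-fr", "version": "2"})
print(response["translation"])
```

Because the model is chosen per request rather than at initialization, the same predictor code can serve any model in the bucket.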

If the model is already cached, the TensorFlow Serving container will run inference immediately. If not, the model is downloaded first, and if the cache is already full, one of the cached models is removed to make room. Once the download finishes, the TensorFlow Serving container runs inference as usual.

Now, all we have to do is deploy our API to the cluster, and we’re done:

If a requested model isn’t cached, our API has to download it, which increases latency for that initial request. However, the download takes a fraction of the time it would take to launch an entirely new instance, and once the model is cached, subsequent requests are served in realtime (unless the model has since been removed from the cache).

As a result, we can now run every obscure language translation model from a single instance, effectively decoupling our quantity of models from our cost of deployment.

Looking forward from here

Multi-model caching is built on top of Cortex’s new live reloading capabilities, which allow Cortex to monitor a model storage directory (like an S3 bucket) for changes and automatically update deployments.
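Conceptually, monitoring a directory for changes comes down to comparing successive listings of it. The sketch below illustrates that idea only; it is not how Cortex implements live reloading. The `diff_snapshots` function, the model paths, and the version tags (which could be something like object ETags or modification times) are all hypothetical.

```python
def diff_snapshots(previous, current):
    """Compare two {model_path: version_tag} listings and report what changed."""
    added = sorted(set(current) - set(previous))
    removed = sorted(set(previous) - set(current))
    updated = sorted(
        path
        for path in set(previous) & set(current)
        if previous[path] != current[path]
    )
    return {"added": added, "removed": removed, "updated": updated}


# Two listings of the same (hypothetical) model directory, taken at different times.
previous = {"en-fr/1": "a1", "en-de/1": "b7"}
current = {"en-fr/1": "a1", "en-de/1": "c9", "eo-archi/1": "d4"}

changes = diff_snapshots(previous, current)
print(changes)
```

A deployment could poll the storage directory on an interval, run a comparison like this, and then load, reload, or unload only the models that appear in the result.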

These capabilities open the door to a variety of more complex deployment strategies, which we are eager to test and iterate on. For example, we’ve put a lot of work into our Traffic Splitter API, which allows us to split traffic between different APIs for things like A/B deployments and multi-armed bandit strategies. Will our ability to cache multiple versions of each model increase the complexity of our testing capabilities?

That’s just one example, but it gestures at the increased complexity that multi-model caching and live reloading allow for in ML deployments. We’re excited to roll both of these features out in our next release, and to see what the community builds with them.
