When the cost of machine learning is discussed, it is often in the context of model training. Left out of these discussions is the bottleneck presented by inference costs, particularly for realtime inference.

Working on Cortex, we’ve spent quite a bit of time optimizing infrastructure for inference cost, and we’ve seen how dozens of other ML teams have approached the problem. Here, I want to share what we’ve learned as a short checklist of opportunities for cost optimization in inference pipelines.

Depending on the level of optimization in your current pipeline, these steps could reduce inference costs by more than 80%.

What you can do to reduce inference costs

Inference costs, particularly in the case of realtime inference, are largely driven by cloud compute costs. Most of our optimizations, in that light, are going to be around reducing our cloud overhead.

Further, none of the optimizations presented below should require you to change your model architecture or train differently—our focus is on optimizations that can take place close to the deploy phase.

With that being said, let’s look at some of the most approachable optimizations we can make to inference costs.

1. Cut out premium-charging tooling

The lowest-hanging fruit from a cost perspective is simply switching away from deployment platforms that charge high premiums. Doing so also enables more optimizations down the line, which I’ll get into later. I’ll pick on SageMaker a bit here, as most people will be familiar with it.

When a model is deployed on SageMaker, it runs on normal EC2 instances, but SageMaker charges an extra 40% for facilitating the deployment. A g4dn.xlarge instance in us-east-1 has an on demand price of $0.526 per hour on EC2. On SageMaker, it costs $0.736 per hour.

That’s a 40% increase in price that you can avoid by deploying to EC2 directly.
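The arithmetic is easy to check. The following is a minimal sketch that uses only the two on-demand prices quoted above; the variable names are illustrative. It also shows that a 40% markup over EC2 is a smaller percentage when measured as a saving against the SageMaker price.

```python
# On-demand prices for g4dn.xlarge in us-east-1, as quoted above (USD per hour).
ec2_price = 0.526
sagemaker_price = 0.736

# Markup SageMaker adds, measured relative to the EC2 price.
premium = sagemaker_price / ec2_price - 1

# Saving from moving to EC2, measured relative to the SageMaker price.
saving = 1 - ec2_price / sagemaker_price

print(f"SageMaker premium over EC2: {premium:.1%}")  # 39.9%
print(f"Saving when moving to EC2:  {saving:.1%}")   # 28.5%
```

In other words, removing a roughly 40% markup takes a little under 30% off the instance portion of a SageMaker bill.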

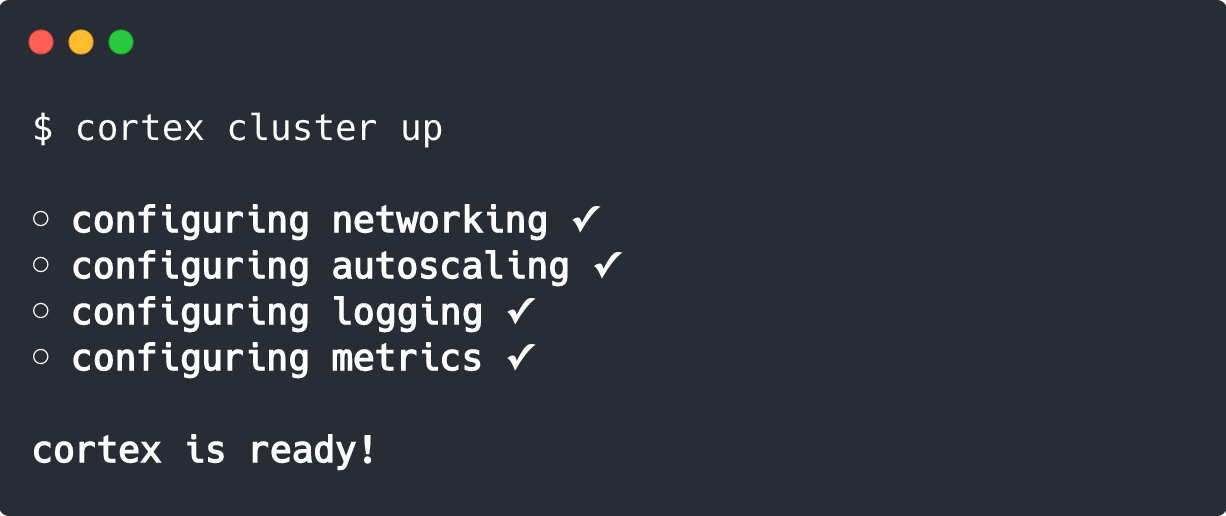

The tradeoff here used to be that deploying directly to EC2 required you to build your own infrastructure. This, however, is no longer the case. You can deploy your pipeline to a cluster provisioned with EC2 instances without setting any of it up yourself—and without paying SageMaker premiums—by simply using open source infrastructure like Cortex.

By switching from SageMaker to Cortex, you avoid that ~40% markup, which works out to roughly 29% off the instance portion of your bill.

2. Optimize autoscaling behavior

If your compute bill is largely driven by the number of instances you have spun up at any given time, lowering that number is an obvious optimization.

Most public clouds provide an out-of-the-box autoscaler for different services, but the standard implementation is a poor fit for inference workloads.

Most autoscalers work based on resource utilization, spinning up new instances as your CPUs approach 100% utilization. This causes two issues in machine learning:

- Many models rely on GPUs or ASICs for inference, making CPU utilization a useless metric.

- Instances can contain many inference APIs, each with different resource needs.

To elaborate on the second point, imagine we have two APIs, one using DeepSpeech for speech-to-text, and the other using BERT for NLP. DeepSpeech is fairly small (less than 100 MB), but it has very high latency. BERT is very large but very fast, so a single BERT API can serve comparatively more concurrent requests.

If we scale based on instance-level resource utilization, we won’t know that we need more DeepSpeech APIs and not more BERTs. As a result, we’ll scale up several instances with unused BERT APIs, overpaying.

A better approach is to scale at the API level. One way to do that, and the way Cortex implements autoscaling, is to autoscale based on the length of an API’s request queue, factoring in the number of concurrent requests an API can handle.

In other words, if the Cortex autoscaler knows your DeepSpeech API can only handle two concurrent requests, and there are 30 in the request queue, Cortex will scale your DeepSpeech API up. Because the DeepSpeech API is smaller, Cortex doesn’t need to spin up as many EC2 instances to host it.

This paradigm, which we call request-based autoscaling, lets you pay for only as many instances as your request load actually requires.
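The core of the idea can be sketched in a few lines. This is not Cortex's actual implementation; `desired_replicas` and its parameters are hypothetical names, and a real autoscaler would also smooth the signal over a time window and apply cooldowns. The sketch only shows the basic calculation: divide the number of in-flight requests by the concurrency each replica can handle.

```python
import math

def desired_replicas(in_flight_requests: int,
                     concurrency_per_replica: int,
                     min_replicas: int = 1,
                     max_replicas: int = 100) -> int:
    """Replicas needed so every in-flight request has a slot (hypothetical helper)."""
    if concurrency_per_replica < 1:
        raise ValueError("concurrency_per_replica must be at least 1")
    needed = math.ceil(in_flight_requests / concurrency_per_replica)
    # Clamp to the configured bounds so the API never scales to zero or without limit.
    return max(min_replicas, min(max_replicas, needed))

# The DeepSpeech example from the text: 30 queued requests, 2 per replica.
deepspeech = desired_replicas(in_flight_requests=30, concurrency_per_replica=2)
# A hypothetical BERT API that handles 16 concurrent requests, with the same queue length.
bert = desired_replicas(in_flight_requests=30, concurrency_per_replica=16)
print(deepspeech, bert)  # 15 2
```

Because the calculation is per API, a backlog on DeepSpeech adds DeepSpeech replicas only, and the BERT API is left alone.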

3. Optimize models for inference

Another obvious optimization is to simply make our models smaller and faster. Ideally, we want to do this without accuracy tradeoffs (though for many applications, minor decreases in accuracy are worth major increases in performance).

There are a few common ways to do this.

The first is via knowledge distillation. You could (as many have) write thousands of words explaining knowledge distillation, but at a high level, a distilled model is one that was trained on a parent model’s predictions instead of on the parent model’s dataset, which in theory allows a smaller model to reproduce most of the parent’s behavior. DistilBERT, developed by the Hugging Face team, is 40% smaller, 60% faster, and 97% as accurate as the normal BERT.

The second popular method is to quantize a neural network, typically by converting its weights from floating-point numbers to fixed-precision integers. The result is a smaller, faster approximation of the original network. For example, ONNX provides a quantizer specifically for Transformer models that can reduce the size of BERT by roughly 4x, to about a quarter of its original size.
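To make the idea concrete, here is a minimal sketch of symmetric linear quantization in plain Python. It is not the ONNX quantizer, and the helper names and sample weights are made up for illustration. It shows where the size reduction comes from: each weight goes from a 4-byte float to a 1-byte integer, at the cost of a small rounding error.

```python
from array import array

def quantize_int8(weights):
    """Symmetric linear quantization of floats to int8 (illustrative only)."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = max_abs / 127.0                        # float value of one integer step
    codes = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate float values from the int8 codes."""
    return [c * scale for c in codes]

# Stand-in for a layer's float32 weights.
weights = array("f", [0.12, -0.5, 0.33, 0.9, -0.77, 0.01])
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("bytes per weight:", weights.itemsize, "->", codes.itemsize)
print("max rounding error:", max_error)
```

The rounding error per weight is bounded by half of `scale`, which is why quantized models usually lose only a little accuracy.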

Finally, we can serve models using model servers and runtimes optimized for inference. For example, ONNX Runtime has built-in optimizations for running inference on Transformer models. I recently benchmarked this and found that by converting a vanilla PyTorch model to ONNX, optimizing it with ONNX’s quantizer, and serving it through ONNX Runtime, inference throughput increased by 40x. That is the difference between spending $175,200 a year on inference and spending only $14,046.

4. Deploy to spot instances

Lastly, we can cut costs by simply paying less for our instances.

Spot instances are unused instances that AWS offers at a steep (as in 40–70%) discount. The tradeoff is that AWS can recall them at any time, but with proper infrastructure, this isn’t much of a concern, namely because:

- Inference is a read-only operation. Switching between instances will not cause us to lose vital state.

- Configured correctly, our clusters will self-heal, redirecting traffic from recalled instances as new ones are spun up.

To enable Spot instances in Cortex, all we need to do is toggle a field in our cluster configuration from false to true. Optionally, we can also customize things like whether our cluster should fall back to on-demand instances when Spot instances are unavailable, or whether there are other instance types we can substitute when our preferred type is unavailable for Spot.

With Spot instances, you can easily slash 40% of your inference bill. For a real-world example, Spot instances played a big part in how AI Dungeon controlled inference costs at scale.

Inference costs are an infrastructure problem

Looking at the state of an average ML team’s infrastructure, there is plenty of low-hanging fruit in terms of inference cost optimization.

Switching to an open source deployment platform removes a markup of roughly 40%. Using Spot instances can cut another 40% or more. Optimizing resource allocation and model serving can increase throughput by factors of 10.

When you add all of that together, you end up with an inference bill that is a fraction of what you started with, all for relatively little work.
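Note that percentage reductions compound multiplicatively; they do not add. The following is a rough sketch under stated assumptions: `remaining_bill` is a hypothetical helper, the platform saving is derived from the g4dn.xlarge prices in section 1, and the Spot discount uses the low end of the range quoted above.

```python
def remaining_bill(*reductions: float) -> float:
    """Fraction of the original bill left after applying each reduction in turn."""
    remaining = 1.0
    for r in reductions:
        if not 0.0 <= r < 1.0:
            raise ValueError("each reduction must be in [0, 1)")
        remaining *= 1.0 - r
    return remaining

platform = 1 - 0.526 / 0.736  # saving from dropping the SageMaker markup (section 1 prices)
spot = 0.40                   # low end of the 40-70% Spot discount quoted above
left = remaining_bill(platform, spot)

print(f"remaining: {left:.0%}, saved: {1 - left:.0%}")  # remaining: 43%, saved: 57%
```

Any throughput gain from autoscaling or model optimization multiplies in on top of that. If we assume instance count falls in proportion to throughput, a 10x gain acts like a further 90% reduction, which is how the total can exceed 80%.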
