While the machine learning ecosystem’s transition to realtime inference, and the challenges therein, are very popular topics for engineers working on ML infrastructure (myself included), there is still plenty to be improved regarding batch inference.

In particular, we routinely see teams implementing batch pipelines running up against the same problems:

- Batch inference jobs are too fragile. The larger your dataset, the more likely that at least one input is misconfigured or otherwise will cause issues with inference. If your entire batch job fails because one inference failed, that’s going to be a pain in your side.

- Building a batch pipeline requires too much configuration. Large datasets have to be stored somewhere. Batch jobs have to be scheduled. Whatever tools/platforms you use for these tasks, they need to interface with your predictor. Making all of the different pieces fit together often requires a large amount of boilerplate and tweaking.

- Debugging batch pipelines can be a headache. When you’re dealing with huge batches of predictions, explainability can be a challenge.

Over the last few releases of Cortex, we’ve implemented a handful of new features specifically focused on fixing these problems in batch inference.

1. Handling failure gracefully with retries and “best attempt” predictions

As noted above, it’s not uncommon for batch pipelines to be configured in such a way that a batch job is considered “failed” if a single inference fails. These pipelines also commonly lack any sophisticated logic for retrying inference.

In a research setting, we may only be interested in serving inference when we cover 100% of the dataset, but in production, this is often not the case.

For example, imagine a fashion ecommerce company had a batch pipeline for serving product recommendations to users (recommendation engines are a fairly common use case for batch inference). The pipeline might look like this:

- Inference is run on their full dataset of users and products via a batch job.

- These recommendations are then cached and referenced by the application while users browse.

- Every two days, the new browsing data generated by users is used to retrain the recommendation engine, and the batch pipeline is run again.

If, out of 1 million users, the batch job fails to generate recommendations for five, the company doesn’t want to throw out the entire job. They want to retry inference on those five users a reasonable number of times, and then serve whatever predictions they could generate.

We’ve implemented both this idea of configurable retry behavior and serving “best attempt” predictions in the latest version of Cortex.

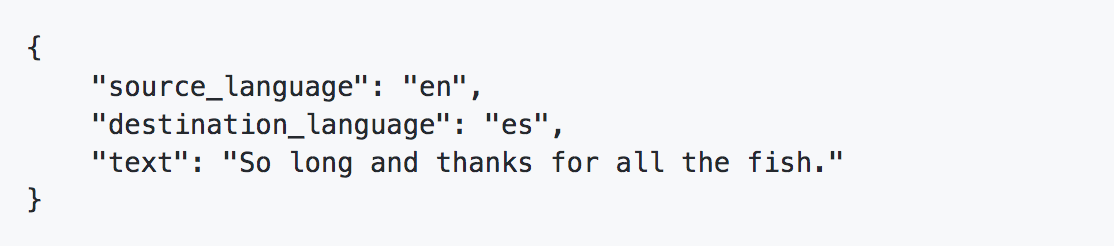

Now, in your API spec, you can set a "timeout" and a "max_receive_count" field, which limit the length of a batch job and the number of times a batch can be processed before terminating, respectively. Until either of those limits is reached, Cortex will automatically retry any failed prediction.

In addition, once inference has been completed for the entire dataset, Cortex will return as many successful batches as possible. The batches that ultimately failed are then stored somewhere else for convenient debugging, which I’ll talk more about in a second.
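
The retry and “best attempt” behavior described in this section can be sketched in a few lines of plain Python. This is only an illustration of the idea, not Cortex’s implementation: `run_batches`, `max_attempts`, and `timeout_seconds` are hypothetical names chosen for the example, and the dead letter “queue” is just a list.

```python
import time
from typing import Callable, Dict, List, Tuple


def run_batches(
    batches: List[list],
    predict: Callable[[list], list],
    max_attempts: int = 3,          # hypothetical stand-in for a per-batch retry limit
    timeout_seconds: float = 60.0,  # hypothetical stand-in for a job-level timeout
) -> Tuple[Dict[int, list], List[int]]:
    """Run predict on every batch, retrying failures until a limit is reached.

    Returns the successful results keyed by batch index, plus the indices of
    batches that never succeeded (the "dead letter" list).
    """
    deadline = time.monotonic() + timeout_seconds
    results: Dict[int, list] = {}
    dead_letter: List[int] = []

    for index, batch in enumerate(batches):
        for _attempt in range(max_attempts):
            if time.monotonic() > deadline:
                break  # job-level timeout reached, stop retrying
            try:
                results[index] = predict(batch)
                break  # success, move on to the next batch
            except Exception:
                continue  # failed attempt, retry if attempts remain
        if index not in results:
            dead_letter.append(index)  # keep the failure without failing the job

    return results, dead_letter


def flaky_predict(batch: list) -> list:
    # Toy predictor: a malformed (None) input makes the whole batch fail.
    if None in batch:
        raise ValueError("malformed input")
    return [x * 2 for x in batch]


results, dead_letter = run_batches([[1, 2], [3, None], [5]], flaky_predict)
```

Here the second batch fails on every attempt and ends up in `dead_letter`, while the other two batches are still returned in `results`.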

2. Improving reproducibility with dead letter queues and log aggregation

In the above YAML, you might have noticed references to a dead letter queue. As of 0.25, you can now specify a queue to send failed batches to, without failing the entire job.

Besides making batch inferences more resilient, as discussed above, the dead letter queue significantly improves debugging and reproducibility.

The debugging benefit is pretty straightforward. The dead letter queue gives you a neat list of failed batches, making it easy to identify patterns and spot problems. This also plays into the reproducibility benefit.

For context, Cortex deployments are reproducible by default. On deploy, all model serving code, configuration, and metadata is packaged and assigned a unique id, creating a de facto versioning scheme. Given any prediction, you can reproduce the deployment responsible for it.

In the dead letter queue, because batches’ metadata is preserved, you can debug failed batches and draw a straight line between them, the job they belonged to, the endpoint that processed the job, and the deployment responsible. This gives you a straightforward path to reproducing a failed batch.
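
As a rough illustration of how preserved metadata helps, the sketch below groups failed batches by job and by error type to make patterns easier to spot. The record layout (`job_id`, `batch`, `error`) is entirely hypothetical and is not the format Cortex writes to the dead letter queue; substitute whatever metadata your messages actually carry.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical dead letter records; the field names are illustrative only.
failed_batches = [
    {"job_id": "job-a", "batch": ["user-17"], "error": "KeyError"},
    {"job_id": "job-a", "batch": ["user-42"], "error": "KeyError"},
    {"job_id": "job-b", "batch": ["user-99"], "error": "ValueError"},
]


def group_failures(records: List[dict]) -> Dict[str, Dict[str, list]]:
    """Group failed batches first by job, then by error type."""
    grouped = defaultdict(lambda: defaultdict(list))
    for record in records:
        grouped[record["job_id"]][record["error"]].append(record["batch"])
    # Convert nested defaultdicts to plain dicts for easier printing/comparison.
    return {job: dict(errors) for job, errors in grouped.items()}


summary = group_failures(failed_batches)
```

With the toy data above, both failures in `job-a` share the same error type, which is the kind of pattern that points to a single underlying cause.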

Note: To version your data and/or training pipelines, check out open source tools like Metaflow or DVC.

In addition to the dead letter queue, Cortex also provides a centralized interface that aggregates the logs and metrics from the distributed inference jobs. These logs can be streamed directly or exported to a third-party platform.

In conjunction, these features mean that batch APIs are both monitorable and reproducible.

3. Building simple, flexible interfaces for data ingestion

To run inference on a large dataset, we need to make it accessible to our predictor, and this can be surprisingly difficult. Oftentimes, we see teams writing a lot of boilerplate and/or hacking things to get their data out of S3 and generate predictions.

To make this easier, we implemented a few different ways to ingest data in a batch API.

The first and most broadly applicable method is to simply pass your data directly into your predictor:

The “item_list” object contains a list of all the data Cortex needs to process, and “batch_size” tells Cortex how many items to process per batch.
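
To make the relationship between the item list and the batch size concrete, here is a minimal sketch of the splitting step. The `make_batches` helper is hypothetical, and the exact layout of the `job_request` dictionary is an assumption based on the field names mentioned above, not a confirmed request format.

```python
from typing import Iterator, List


def make_batches(items: List, batch_size: int) -> Iterator[List]:
    """Yield consecutive slices of items, each at most batch_size long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


items = ["a", "b", "c", "d", "e"]

# Assumed request layout, for illustration only.
job_request = {"item_list": {"items": items, "batch_size": 2}}

batches = list(
    make_batches(
        job_request["item_list"]["items"],
        job_request["item_list"]["batch_size"],
    )
)
```

Five items with a batch size of two produce three batches, the last of which holds the single leftover item.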

This method works in the widest variety of situations, but it also requires the most manual configuration. If you use S3 to store your dataset, however, Cortex can automate quite a bit of the ingestion process for you.

For example, you can simply pass Cortex an S3 URL, and Cortex will automatically import your dataset. If you have extraneous files in your S3 bucket, you can also specify which files Cortex should ignore or include:
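
The include/ignore filtering can be pictured as glob matching over object keys. The sketch below is an assumption about the general idea, using Python’s standard `fnmatch` module on a hard-coded list of keys; it does not call S3, and `select_keys` is a hypothetical helper rather than anything Cortex exposes. Cortex’s actual pattern syntax may differ.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List, Sequence


def select_keys(
    keys: Iterable[str],
    includes: Sequence[str] = ("*",),
    excludes: Sequence[str] = (),
) -> List[str]:
    """Keep keys that match any include pattern and no exclude pattern."""
    selected = []
    for key in keys:
        included = any(fnmatchcase(key, pattern) for pattern in includes)
        excluded = any(fnmatchcase(key, pattern) for pattern in excludes)
        if included and not excluded:
            selected.append(key)
    return selected


# Toy listing standing in for the contents of an S3 prefix.
keys = [
    "images/cat.jpg",
    "images/dog.jpg",
    "images/README.md",
    "images/tmp/draft.jpg",
]

selected = select_keys(keys, includes=["*.jpg"], excludes=["*/tmp/*"])
```

Only the two top-level `.jpg` files survive: the README fails the include pattern, and the draft image is caught by the exclude pattern.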

In addition to this, Cortex has an interface for when your input dataset is a newline-delimited JSON file in an S3 directory (or a list of them). You can pass Cortex a link to your JSON file(s), and it will break the data apart into batches. This is particularly useful when one or more S3 files contain a large number of samples and must be broken down into batches:

This approach also offers “includes” and “excludes” fields for filtering your dataset.
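
Conceptually, splitting a newline-delimited JSON file into batches looks something like the following. This is a local sketch of the idea, not Cortex’s code: `batch_json_lines` is a hypothetical helper, and the input is an in-memory string rather than a file read from S3.

```python
import json
from typing import Iterable, Iterator, List


def batch_json_lines(lines: Iterable[str], batch_size: int) -> Iterator[List[dict]]:
    """Parse one JSON object per line and yield them in groups of batch_size."""
    batch: List[dict] = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        batch.append(json.loads(line))
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly smaller, batch


ndjson = '{"id": 1}\n{"id": 2}\n\n{"id": 3}\n'
batches = list(batch_json_lines(ndjson.splitlines(), batch_size=2))
```

Because the function consumes lines lazily, the same approach works on a streamed file that is too large to hold in memory at once.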

This is just the beginning. We’re currently developing more interfaces for ingesting datasets for batch prediction, including for Google Storage now that Cortex supports GCP.